10x Productivity: AI Coding Tools CTOs Can't Ignore in 2025

“AI coding tools in 2025 are the ultimate collaborators: think of them as a genius pair-programmer who never sleeps.” - Sundar Pichai (CEO, Alphabet)

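Publicly available AI tools such as Gemini and ChatGPT, often loosely described as "open source" even though their underlying models are proprietary, are becoming as important in the workplace as email and chat. This article uses "open source" in that loose sense, meaning AI tools that anyone can sign up for and use. From organizing data to generating content ideas, employees can use AI to enhance productivity, automate tasks, and generate insights.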

It doesn't come without risk, however. Without proper guidelines, employees may inadvertently expose their organization to security threats, compliance issues, and misinformation. Developing a clear AI usage policy ensures employees understand how to use these tools responsibly while protecting the company from unintended consequences.

Employees should never input confidential, proprietary, or personally identifiable information into open-source AI systems. These tools often store inputs and use them for model training, making it difficult to guarantee privacy. Any AI-generated output used for business decisions should be reviewed and validated before implementation to prevent leaks and misinformation. It's also important to have guidelines in the employee handbook that state the types of input employees can use in open-source platforms, especially for marketing and product management teams, who may often use these platforms to generate new ideas.
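One way to back up an input guideline like this is a lightweight check that scrubs obvious identifiers from a prompt before it leaves the company. The sketch below is a minimal illustration only: the function name `redact` and the three regular expressions are assumptions made for this example, and simple patterns like these will miss many kinds of confidential data, so they do not replace training or a vetted data-loss-prevention tool.

```python
import re

# Hypothetical patterns for illustration only. A real policy would rely on a
# vetted detector and a list of data types agreed with security and legal.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(prompt: str) -> tuple[str, list[str]]:
    """Replace matches with placeholders and list the categories that were found."""
    found = []
    for label, pattern in PATTERNS.items():
        # subn returns the rewritten text and the number of substitutions made.
        prompt, count = pattern.subn(f"[{label} REDACTED]", prompt)
        if count:
            found.append(label)
    return prompt, found


clean, categories = redact("Draft a reply to jane.doe@example.com about invoice 42.")
print(clean)       # Draft a reply to [EMAIL REDACTED] about invoice 42.
print(categories)  # ['EMAIL']
```

Returning the list of matched categories alongside the cleaned text lets a team log how often sensitive data is caught, which can feed back into training.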

It's also important for teams to understand how bias can affect the outputs generated by an open-source platform. Employees should be trained to recognize and mitigate bias in AI-generated outputs, ensuring that decisions made with AI assistance align with ethical and regulatory standards. Companies should adopt fairness audits and bias detection tools to identify and correct problematic AI-generated content. Encouraging employees to question AI recommendations and to seek human review on critical matters is essential for maintaining fairness and accountability.

AI-generated outputs should never be assumed to be completely accurate. Employees should verify all information before using it in reports, customer interactions, or decision-making processes. AI models can generate plausible-sounding but incorrect responses, often referred to as hallucinations. Companies should implement quality control measures, including AI literacy training and internal review processes, to prevent misinformation from spreading.

Employees should avoid using open-source AI tools to generate proprietary content without understanding potential intellectual property risks. Many AI tools do not clarify ownership of generated content, leading to disputes over originality and copyright. Organizations should outline specific guidelines on when AI-generated content can be used for business purposes and whether attribution is required. Reviewing terms of service for AI platforms and ensuring compliance with copyright laws helps prevent potential legal complications.

Not all AI applications are suitable for every business function. Companies should define acceptable use cases for open-source AI, such as brainstorming ideas, summarizing information, or drafting non-sensitive content. High-risk areas, including legal, financial, and regulatory matters, should require human review and approval. Clear policies help employees understand when AI is a helpful tool and when human expertise is required.
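A policy like this is easier to apply consistently when it is written down as data rather than left to memory. The following is a rough sketch, assuming the acceptable uses and high-risk areas named above; the identifiers `ALLOWED_USES`, `HIGH_RISK_AREAS`, and `check_use_case` are hypothetical, and each organization would substitute its own categories.

```python
from enum import Enum


class Decision(Enum):
    ALLOWED = "allowed"
    NEEDS_HUMAN_REVIEW = "needs_human_review"
    NOT_COVERED = "not_covered"


# Categories taken from the paragraph above; the identifiers are hypothetical.
ALLOWED_USES = {"brainstorming", "summarization", "non_sensitive_drafting"}
HIGH_RISK_AREAS = {"legal", "financial", "regulatory"}


def check_use_case(use_case: str, business_area: str) -> Decision:
    """Return how the policy treats a proposed use of an AI tool."""
    # High-risk areas always require human review, whatever the task is.
    if business_area.lower() in HIGH_RISK_AREAS:
        return Decision.NEEDS_HUMAN_REVIEW
    if use_case.lower() in ALLOWED_USES:
        return Decision.ALLOWED
    # Anything the policy does not name should be raised with the policy owner.
    return Decision.NOT_COVERED


print(check_use_case("brainstorming", "marketing"))  # Decision.ALLOWED
print(check_use_case("summarization", "legal"))      # Decision.NEEDS_HUMAN_REVIEW
```

Checking the business area first is a deliberate choice in this sketch: even a low-risk task such as summarization is routed to a human when it touches legal, financial, or regulatory work.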

AI technology evolves rapidly, and company policies should keep pace. Regularly reviewing and updating AI usage guidelines ensures they remain relevant as new tools and risks emerge. Employees should receive ongoing training on AI best practices, security protocols, and compliance requirements. Executives should be trained to discuss open-source AI policies with their teams, and leadership should be fully aligned on the practices and standards the organization has set.

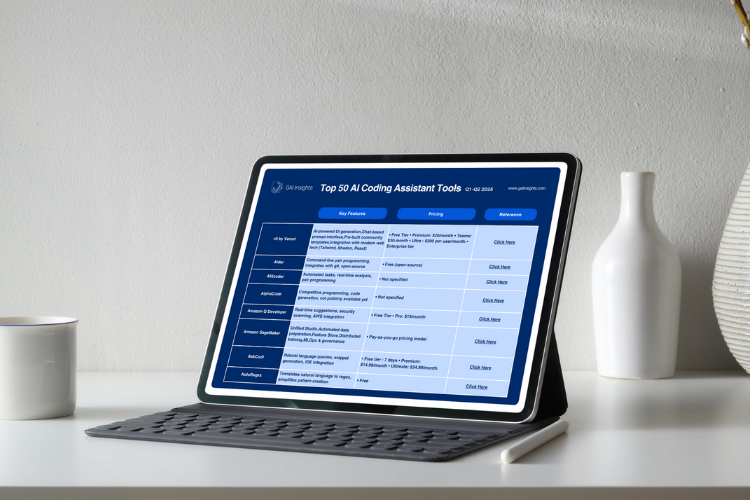

If organizations want to have the best guardrails available for their employees, they should provide secure AI platforms that align with data protection standards and clearly outline what types of data are permissible for AI interactions. If you're researching secure programs but are unsure where to begin, download our Secure Employee Chatbot Guide to fully understand what is available in terms of cost, capabilities, and levels of security.
